A subtle AI failure

Imagine your AI-powered lead scoring model quietly drops your highest-value customer segment from sales outreach for 3 weeks.

How serious would this be in your context? Consider how your risk exposure shifts as the severity rises.

Think of one AI use case you sponsor. Would a quiet failure be worse if it affected: customers, employees, or regulators?

The Roadmap to Governance

You do not need to read code to govern AI risk. You do need a clear language and playbook.

This lesson covers three parts of your role in AI governance: identifying, prioritizing, and mitigating risks.

- Lifecycle hotspots

- Business impact translation

- Prioritizing controls

- Zero surprises

- Clearer team communication

- Audit readiness


The AI Lifecycle

Risk isn't just a deployment problem. It exists at every stage of development.

Risk tends to concentrate at specific "hotspots" in the development process:

- Problem framing: Misaligned objectives.

- Data: Bias, drift, and weak consent.

- Model: Overfitting and lack of robustness.

- Deployment: Poor guardrails.

- Monitoring: Failure to detect abuse.

The Four Pillars of Risk

Most governance frameworks cluster AI risk into patterns you can reason about without a math degree.

- Performance: Low-quality outputs.

- Fairness: Unequal group impact.

- Security: Prompt injection/leakage.

- Compliance: Regulatory breaches.

Which patterns match your incident idea?

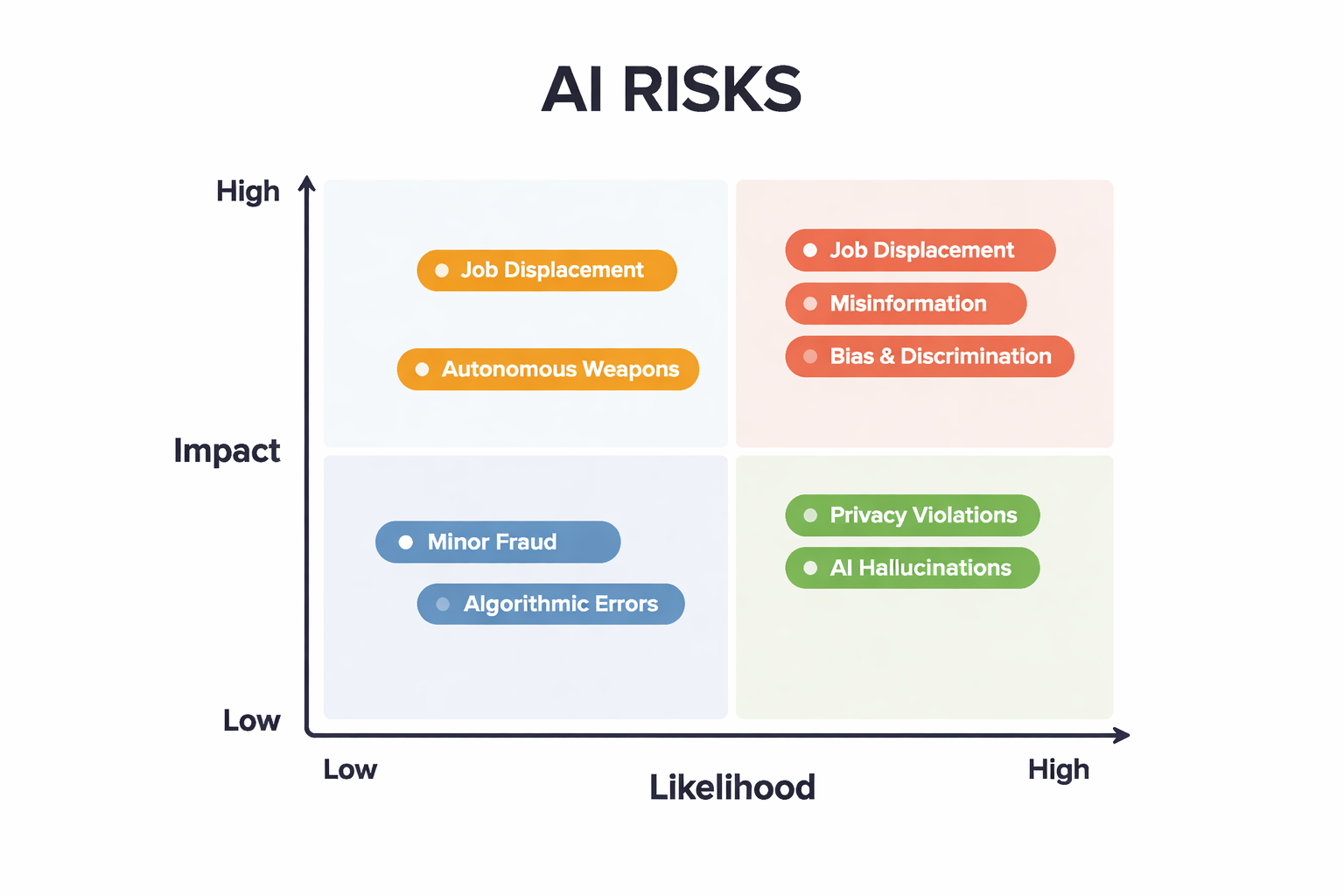

The Risk Heatmap

To prioritize, leaders use a simple Likelihood x Impact matrix to decide where to focus resources.

Worth tracking closely; ensure owners are defined.
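For teams that want to make the matrix concrete, the Likelihood x Impact product can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the 1-to-5 scales, the band cut-offs (15 and above is "high", 6 and above is "medium"), and the three example use cases with their ratings are all assumptions made for this sketch.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)


def risk_score(use_case: UseCase) -> int:
    """Likelihood x Impact: the product used to place a use case on the heatmap."""
    return use_case.likelihood * use_case.impact


def risk_band(score: int) -> str:
    """Map a score to a heatmap band. The cut-offs are hypothetical."""
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


# Hypothetical portfolio; the ratings are made up for illustration.
portfolio = [
    UseCase("lead scoring", likelihood=3, impact=4),
    UseCase("internal meeting summaries", likelihood=2, impact=1),
    UseCase("regulated underwriting", likelihood=3, impact=5),
]

# Rank use cases so the highest scores get attention first.
ranked = sorted(portfolio, key=risk_score, reverse=True)
for use_case in ranked:
    score = risk_score(use_case)
    print(f"{use_case.name}: score={score}, band={risk_band(score)}")
```

A pure product can understate rare but severe failures: a likelihood of 1 with an impact of 5 scores only 5. Whether to escalate such cases regardless of score is a policy choice this sketch leaves open.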

Check Your Thinking

Where is the earliest place you can reduce AI risk?

Governance Levers

You don't just "hope" for safe AI. You pull specific governance levers to enforce safety standards.


The Control Tiers

Not every model needs the same level of rigor. Scale your controls to the stakes of the use case.

- Baseline: Logging, owner, basic testing.

- Elevated: Bias checks, human review, change logs.

- High risk: Formal approvals, red-teaming, audits.

If the use case is "High" on your matrix or touches regulated data, apply the High risk tier.
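The tier rule above can be written down as a small decision function, which some teams find useful for intake forms or model registries. This is a sketch under stated assumptions: the function names are hypothetical, the mapping of a "medium" matrix band to the Elevated tier is an assumption, and so is the idea that each tier inherits the controls of the tiers below it.

```python
# Controls per tier, taken from the tier list above.
CONTROLS = {
    "Baseline": ["logging", "named owner", "basic testing"],
    "Elevated": ["bias checks", "human review", "change logs"],
    "High risk": ["formal approvals", "red-teaming", "audits"],
}
TIER_ORDER = ["Baseline", "Elevated", "High risk"]


def control_tier(matrix_band: str, touches_regulated_data: bool) -> str:
    """Pick a tier from the heatmap band and a regulated-data flag."""
    if matrix_band == "high" or touches_regulated_data:
        return "High risk"
    if matrix_band == "medium":  # assumption: medium maps to Elevated
        return "Elevated"
    return "Baseline"


def required_controls(tier: str) -> list[str]:
    """Assumes tiers are cumulative: each tier includes the ones below it."""
    controls = []
    for name in TIER_ORDER[: TIER_ORDER.index(tier) + 1]:
        controls.extend(CONTROLS[name])
    return controls


# A low-scoring use case still lands in the top tier if it touches regulated data.
tier = control_tier("low", touches_regulated_data=True)
print(tier, required_controls(tier))
```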

Prioritizing Controls

Which situation most clearly justifies high-risk AI controls?

Who Owns What?

Clear ownership stops critical issues from falling through the cracks between departments.

The Incident Playbook

Incidents are inevitable; surprise and confusion are optional. Prepare your team now.

Leaders need clarity on: detection thresholds, notification paths, and "kill switch" procedures.

- Triage: Confirm impact scope.

- Stabilize: Roll back or pause.

- Learn: Root cause analysis.
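To show what a detection threshold and a "kill switch" rule might look like in practice, here is a minimal Python sketch based on the lead scoring scenario from the start of this lesson. Everything specific in it is an assumption: the function name, the idea of tracking a segment's share of outreach, and the thresholds (notify at a 25% relative drop, pause at 50%).

```python
def check_segment_coverage(
    baseline_share: float,
    current_share: float,
    pause_at_drop: float = 0.5,  # hypothetical kill-switch threshold
) -> str:
    """Compare a segment's share of outreach with its baseline.

    Returns "pause" (stabilize: roll back or pause the model),
    "notify" (triage: alert the owner to confirm scope), or "ok".
    """
    if baseline_share <= 0:
        raise ValueError("baseline_share must be positive")

    # Relative drop: 0.0 means unchanged, 1.0 means the segment vanished.
    drop = (baseline_share - current_share) / baseline_share

    if drop >= pause_at_drop:
        return "pause"
    if drop >= pause_at_drop / 2:  # assumed early-warning level
        return "notify"
    return "ok"


# The highest-value segment normally receives 20% of outreach.
print(check_segment_coverage(0.20, 0.19))  # small dip
print(check_segment_coverage(0.20, 0.14))  # noticeable drop
print(check_segment_coverage(0.20, 0.00))  # segment silently dropped
```

Run daily, a check like this would surface the quiet failure within a day rather than three weeks. The right metric and thresholds depend on the use case and should be agreed with the model owner.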

Key Takeaways

You're ready to lead AI risk conversations with confidence.

- Lifecycle View: Risk starts at the "problem framing" stage.

- Prioritization: Use Likelihood x Impact to budget attention.

- Tiers: Match control depth to the stakes.

- Playbooks: Define incident response before you need it.

Final Assessment

Apply what you've learned to check your proficiency.

4 scenario-based questions. 80% score required for certification.

Assessment Q1

Where should you first look to reduce fairness risk in a support ticket prioritization model?

Assessment Q2

A model has low likelihood but very high impact if it fails. How should you treat it?

Assessment Q3

Which control set best fits a regulated underwriting AI tool?

Assessment Q4

What is the best leadership action when a hiring AI shows bias?